NSERC Peer Review System is Broken for Mathematics

Anomalous results of the 2011 NSERC Discovery Grants competition in mathematics have provoked a loss of confidence in the NSERC peer review system. To avoid a substantial loss of Canada’s scientific talent, which has been enhanced through the Canada Research Chairs program and other spectacular hiring over the past ten years, scientific policymakers need to quickly fix the broken peer review system. In the absence of an effective peer review process setting the strategy for research investment, Canada will miss out on the rewards of the past decade’s recruitment of scientific talent.

What is happening in other sciences? Anecdotal reports from the following sources suggest the anomalies are not restricted to the math department at Toronto:

- Toronto: MATH, CHM, EEB, PHY, STA, Engineering

- UBC: CS, MATH

- Queen’s: MATH

In 2007, NSERC commissioned a review by an international committee culminating in this report. (Please find my annotated version here and a marked up version of the NSERC Management response to the 2007 International Review Committee Report.) This report made recommendations leading to fundamental changes in the peer review process for all disciplines starting in 2009. The implementation of these changes (involving the so-called conference model and binning system) and other forces have provoked a loss of confidence in the peer review process at NSERC among mathematicians at Toronto, and across Canada.

Toronto Math Results are Anomalous

The results (names omitted) of the 2011 NSERC Discovery Grants Competition for the Department of Mathematics at the University of Toronto are anomalous:

- Professor A. \$29k/y to \$18k/y

- Professor B. 40 to 15

- Professor C. 42 to 30 to 42 to 18

- Professor D. 26 to 18

- Professor E. 40 to 40

- Professor F. 38 to 47

- Professor G. 0 to 0

- Professor H. 15 to 13
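The relative size of each change can be checked with a few lines of Python. This is only a minimal sketch: the figures are copied from the list above (in \$K/y), Professor C's entry uses the most recent step (42 to 18), and the helper name `percent_change` is a hypothetical choice made for illustration.

```python
def percent_change(before, after):
    """Return the percent change from `before` to `after`.

    Returns None when `before` is 0, since the ratio is undefined.
    """
    if before == 0:
        return None
    return 100.0 * (after - before) / before


# Grant levels in $K/y, taken from the list above.
# Professor C: only the most recent step (42 -> 18) is used here.
grants = {
    "A": (29, 18),
    "B": (40, 15),
    "C": (42, 18),
    "D": (26, 18),
    "E": (40, 40),
    "F": (38, 47),
    "G": (0, 0),
    "H": (15, 13),
}

changes = {name: percent_change(b, a) for name, (b, a) in grants.items()}

for name, pct in changes.items():
    label = "n/a" if pct is None else f"{pct:+.0f}%"
    print(f"Professor {name}: {label}")
```

Under these figures, the 42 to 18 step for Professor C works out to a cut of about 57%.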

About Professor C.

Consider the case of CMS award winning Professor C. In 2010, this researcher’s grant was cut from 42 down to 30. After an appeal, the grant was reinstated for one year back to 42. In this year’s competition, one year after the appeal, NSERC drops it to 18, a 57% cut. Meanwhile, Professor C’s frequent collaborator (each had more than 50% overlap of their research with the other during 2006-2011), Professor K., received 45 staying at 100% of the previous level in this competition. Will the real opinion of NSERC on Professor C’s research please stand up? Professor C’s story is quite similar to Don Fraser’s personal account. (Within the conference model, I understand that a different group of only 5 experts may have reviewed the proposals of Professors C and K. This remark can account for the inconsistency but reveals aspects of larger issues that need to be fixed.)

About Professor B.

Imagine running a successful research operation (success, former students get awards, 13 major pubs in 2006-2011) for the past five years like Professor B using 45K/y. Students are in the pipeline; postdoc candidates have been scouted; Professor B has new ideas. NSERC rewards this person with a drop from 45 down to 18, a 60% cut. This researcher is confused with the outcome: “What did I change? What should I have done differently?”

About Professor G.

This person is a (perhaps the) world leading expert on a substantial research area. It seems this person, despite spectacular research success, is unworthy of a Discovery Grant because they don’t produce enough students. It is as though Canada has a sports car and they don’t put tires on it.

Secondary Effects Scenarios

Mathematicians, of international calibre, with a steady research production stream and surrounded by young researchers have had their grants slashed by nearly 60% during the 2011 NSERC Discovery Grants Competition.

- Now, imagine you are an assistant professor in Canada. You might have nice support right now, like a Sloan or an ERA. You are building a research group, spending money on HQP, scouting talent. But your funding has a finite time horizon and the Discovery Grants look unstable, unpredictable. So, would you leave Canada if you could? Fix NSERC or the young talent will leave Canada.

- Now, imagine you are a recently recruited Canada Research Chair: if you just concluded that your junior faculty member might be wise to leave Canada, how do you see your department in 10 years? Would you leave Canada if you could? Fix NSERC or the CRCs will leave Canada.

There are (at least) two main problems:

- Math in Canada is treated unfairly compared to other disciplines

- The Peer Review system is broken

Math in Canada is treated unfairly

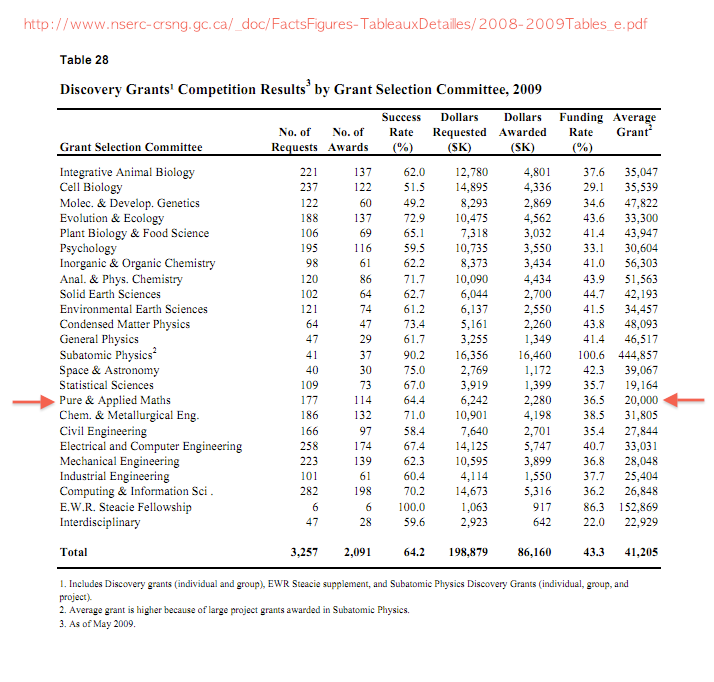

The main problem with mathematics funding in Canada is that the amount invested is too low. I’ve written about this before. Consider the data from 2009 of NSERC Discovery Grants (2010 is similar, 2011 is not available) over the disciplines:

The average math and stats grant is $20K/y while the average over all disciplines is $41K/y. Why is it that the average scientist in Canada can expect more than double the amount a Canadian mathematician can expect? Keep in mind that Discovery Grants are primarily used to fund research personnel not expensive labs.

David Wehlau’s data (posted and discussed here) reveals the trend: over the past twenty years, mathematics investment as a percentage of the total amount in Discovery Grants funding has declined from nearly 4% down to 2%. Math has received less and less funding compared to other disciplines. This subtle reallocation needs to be abruptly reversed.

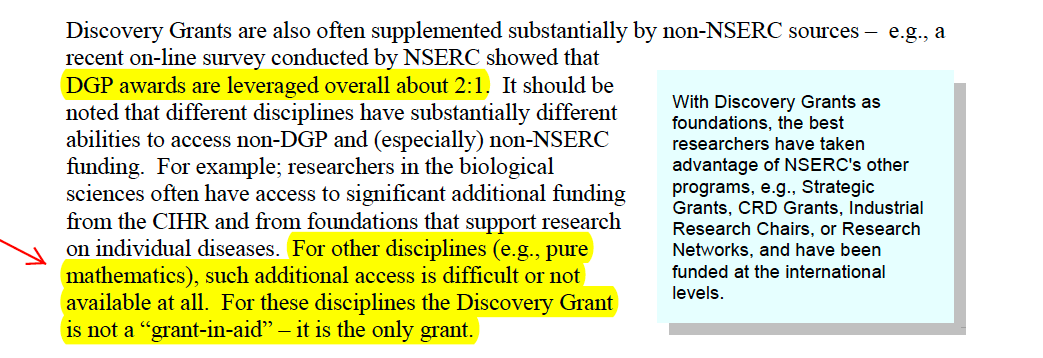

The unfairness then multiplies. Consider the following snippet from the 2007 international review report:

Other disciplines are benefitting more from other industrially targeted NSERC programs and other sources compared to pure mathematicians. NSERC views Discovery Grants as grant-in-aid: a precursor grant leading to other sources of funds. The international review committee reports that this is not the case for mathematics AND mathematicians receive on average less than half the funds received by other scientists. This is an implicit funding reallocation away from mathematics toward other disciplines and is unfair.


Broken Peer Review System

The outcome of the 2011 competition, and consistent reports (like Don Fraser’s) from the past two years, have provoked a loss of confidence in the peer review system at NSERC. To rebuild trust and avoid the departure of talented scientists, the mathematics community of Canada needs to know what happened in 2011. We need to understand why the peer review system produced the 2011 funding allocations.

There has been considerable chatter in the mathematics community about the 2011 competition. However, we need people with official roles to speak officially at this time. In addition to the forthcoming data from NSERC, I hope that Section 1508, the Mathematics and Statistics Evaluation Committee, will explain the 2011 evaluation process and actively participate in discussions leading to an improved system that regains the confidence of mathematicians and statisticians working in Canada. What happened? How can we fix it?